Grants:Project/Hjfocs/soweego

This project is funded by a Project Grant


With soweego, our beloved knowledge base gets in sync with a giant volume of external information, and gets ready to become the universal linking hub of open data.

Project idea

soweego (solid catalogs and weekee go together) is a fully automatic robot that links existing Wikidata items about people to a set of reliable external catalogs.

soweego aligns different identifiers, but has nothing to do with the Go game!

Why: the problem

Data quality in a broad knowledge base (KB) like Wikidata is vital to secure confidence in its content, thus encouraging the development of effective ways to consume it. Wikidata is not meant to tell us the absolute truth: instead, we can see it as a container for different points of view (read claims) about every little fact of our world. These claims should always be verified against at least one reference to a trusted external source. However, this still does not seem to be the case. Despite specific efforts such as WikiFactMine[1] and StrepHit,[2] as well as more extensive community endeavors like WikiCite,[3] the lack of references is a critical issue that remains open. Recently, the problem was further highlighted by the Wikidata product manager in her presentation at WikiCite 2017,[4] where the following aspects about the KB statements clearly emerged:

- roughly half of them are totally unreferenced;

- less than a quarter of them have references to non-wiki sources;

- most reference values are internal links to other Wikidata items.

How: the solution

Alignment of Wikidata to structured databases can represent a complementary alternative to reference mining from unstructured data.[1][2] Think of an identifier as a reference to a Wikidata item: we can strengthen the trustworthiness of that item if we manage to match it with the corresponding entry of an authoritative target database.

The main challenge here is to find a suitable match, keeping in mind that labels can be the same, but the entities they represent can be totally different. This is better explained through an example: you are thinking of your favorite singer John Smith. You go to Wikidata and type his name in the search text box. You get back a long list of results: John Smith the biologist, John Smith the politician, etc. Where is John Smith the singer? You find him after a while. Unfortunately, there's not much information in the Wikidata item, so you decide to go to MusicBrainz,[5] hoping you can get something more. You repeat the query there. A ton of other John Smiths appear. You finally find the one you were looking for: it's a match! There is much more data here, so you decide to link it to Wikidata.

soweego does this job for you: it disambiguates a source Wikidata item to a target database entry, at a large scale.

Figure 1 visually describes the solution.

soweego solution. soweego creates a connection (read performs disambiguation) between entries of the source database (Wikidata) and a target database.

From a technical perspective, we cast this as an entity linking task, which takes as input a Wikidata item and outputs a ranked list of identifier candidates. The solution has two main advantages:

- it raises neither licensing nor privacy concerns, since no data import occurs;[6]

- once linking is achieved, additional structured data can be directly pulled from the target identifiers, with no overload for Wikidata.
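The entity linking formulation above can be illustrated with a minimal sketch. It is not the soweego implementation: the `Candidate` type, the `rank_candidates` function, the placeholder identifiers, and the choice of plain string similarity from Python's standard `difflib` module are all assumptions made for illustration.

```python
from dataclasses import dataclass
from difflib import SequenceMatcher


@dataclass(frozen=True)
class Candidate:
    """A target database entry (hypothetical structure)."""
    identifier: str
    name: str


def similarity(a: str, b: str) -> float:
    """Case-insensitive string similarity in [0, 1]."""
    return SequenceMatcher(None, a.lower(), b.lower()).ratio()


def rank_candidates(item_label: str, candidates: list[Candidate]) -> list[tuple[Candidate, float]]:
    """Return candidates sorted by descending similarity to the item label."""
    scored = [(c, similarity(item_label, c.name)) for c in candidates]
    return sorted(scored, key=lambda pair: pair[1], reverse=True)


# Made-up target entries; the identifiers are placeholders, not real ones.
targets = [
    Candidate("target-001", "Jon Smyth"),
    Candidate("target-002", "John Smith"),
    Candidate("target-003", "Jane Doe"),
]
ranking = rank_candidates("John Smith", targets)
```

As the John Smith example shows, names alone cannot tell homonyms apart, so an actual linker would presumably need further evidence (for instance dates or occupations) on top of a label score like this one.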

The approach is best described through the proof of work detailed below.

What: proof of work

We have uploaded a demonstrative dataset to the Wikidata primary sources tool.[7]

This is a first prototype implementation, where we link a small set of Wikidata items to their MusicBrainz identifiers.

Figure 2 and Figure 3 show screenshots of the tool with soweego data, displayed in a list view and on an item page respectively.

We invite reviewers and interested readers to activate the tool and select the soweego dataset:

d:Wikidata:Primary_sources_tool#Give_it_a_try

The sample proves that the project idea is fully achievable.

SocialLink

A similar entity linking task (focusing on DBpedia) has already been addressed in our SocialLink system,[8] and assessed by the research community.[9][10]

With soweego, we switch to Wikidata and to different target identifiers: consequently, we plan to reuse the SocialLink approach as much as possible.

Where: target selection

To identify a relevant set of target databases, we aimed at combining direct community feedback with usage statistics on Wikidata. We reached out to the Wikidata community for a first informal inquiry about which external identifiers are worth covering.[11] Despite the fruitful discussion,[12] we have not received any specific pointers for target candidates so far. Therefore, we detail below evidence which is solely based on statistics, and recognize that the final set of targets should be assessed via more structured community discussion, which is already happening at the time of writing this proposal.[13]

Given these premises, we focus on a specific use case to set the current scope for soweego: Wikidata items about people.

The rationale is that people represent a remarkable slice of the entire KB, namely more than 10% (3.6 million[14] out of 34 million items).[15] Furthermore, we assume that people are likely to be present in comprehensive external databases.

A complete list of candidate targets based on current usage can be retrieved through SQID:[16] a total of 780 Wikidata properties expect an external identifier as value, which entails the same number of databases. We believe it is reasonable (with respect to the time frame of this proposal) to pick a set of 4 targets from that list, and the choice will be fully driven by community consensus.

Observations on the most used large-scale catalogs

The most used external catalog is VIAF:[17] it is linked to 25% of people in Wikidata, about 910 thousand.[18] The Library of Congress (LoC)[19] and GND[20] come right after VIAF,[21] but the coverage drops to 11%, with about 413[22] and 410[23] thousand people respectively. The statistics hold for living people (2.1 million), too.[24] These observations, together with relevant comments by the community,[13] serve as a starting point to further investigate the selection of our final targets.
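The coverage percentages above follow directly from the quoted counts, as the sketch below shows. The `coverage` and `count_query` helpers are hypothetical names, and the SPARQL string is only an illustrative way to count people carrying a given identifier property; it is an assumption, not the query referenced in the footnotes.

```python
# Figures quoted in the text above (approximate counts of Wikidata items).
TOTAL_PEOPLE = 3_600_000
LINKED_PEOPLE = {"VIAF": 910_000, "LoC": 413_000, "GND": 410_000}


def coverage(linked: int, total: int) -> float:
    """Share of people carrying a given identifier, as a percentage."""
    return 100 * linked / total


def count_query(property_id: str) -> str:
    """Build a SPARQL query counting humans (Q5) that have the given property.

    Assumption: an illustrative query, not the one used in the proposal.
    """
    return (
        "SELECT (COUNT(DISTINCT ?person) AS ?count) WHERE { "
        f"?person wdt:P31 wd:Q5 ; wdt:{property_id} ?id . }}"
    )


percentages = {name: round(coverage(n, TOTAL_PEOPLE), 1) for name, n in LINKED_PEOPLE.items()}
viaf_query = count_query("P214")  # P214 is the Wikidata property for VIAF ID
```

With these inputs, VIAF comes out at roughly a quarter of all people and the other two catalogs at roughly 11%, in line with the rounded figures in the text.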

Project goals

- G1: to ensure live maintenance of identifiers for people in Wikidata, via link validation;

- G2: to develop a set of linking techniques that align people in Wikidata to corresponding identifiers in external catalogs;

- G3: to ingest links into Wikidata, either through a bot (confident links), or mediated by curation (non-confident links) through the primary sources tool;[7]

- G4: to achieve exhaustive coverage (ideally 100%) of identifiers over 4 large-scale trusted catalogs;

- G5: to deliver a self-sustainable application that can be easily operated by the community after the end of the project.

While we focus on people because of their relevance in the KB, we will build an extensible system that can scale up to other types of items (e.g., organizations, events, pieces of work).

Project impact

Output

The deliverable outputs are as follows.

- D1: a reusable link validator, which will be applied to validate existing identifiers of the target databases (goal G1);

- D2: 4 linkers that generate candidate links from people in Wikidata to the target identifiers (goal G2);

- D3: a link merger that can take as input D2 output, as well as any links to external identifiers contributed to Wikidata (goal G1);

- D4: ingestion facilities for automatic or semi-automatic addition of identifiers into Wikidata (goal G3);

- D5: 4 identifier datasets that align the maximum amount of people in Wikidata to the target databases (goal G4);

- D6: a packaged application (virtual machine) that contains the whole soweego system, ready to be operated by the Wikidata community for future use (goal G5).

Outcomes

Outcome 1: a bridge to major data hubs

Q: what if reliable external catalogs become queryable from Wikidata?

A: everyone can use Wikidata as a source of federated knowledge.

Q: but isn't Wikidata knowledge already federated?

A: partially, although the community has always been active with respect to this aspect.

Nevertheless, it may take a long time and consistent effort to maintain identifiers, since it is entirely up to the community to (eventually) take care of them.

Besides its in-scope targets (see Project goals, G4), soweego can be reused for new ones, as well as for live maintenance of existing ones.

Therefore, the ultimate outcome of soweego is the creation of a tight connection between Wikidata and trusted target databases.

Outcome 2: a pillar over the reference hole

In this way, soweego would produce another important impact: essential statements about people (e.g., name, occupation, birth date, picture) can be validated and referenced against the target, thus also tackling the reference gap issue.

Outcome 3: engagement of trusted data providers

From a different perspective, soweego helps Wikidata become the central linking hub of open knowledge.

We believe this offers a valuable stimulus to external data providers that were not previously involved in Wikidata.

Once a target database gets exhaustively linked, the benefit for them is two-fold:

- they can automatically obtain data not only from Wikidata itself (think of statements and site links), but also from other interlinked databases;

- their data maintenance burden is dramatically reduced, since it is shifted to Wikidata.

Project metrics

We plan to upload the soweego datasets (see Output, D5) to Wikidata via the primary sources tool[7] and/or a bot,[26] depending on the estimated precision of the alignment.

In other words, we will use the primary sources tool for non-confident data needing curation by the community, and a bot for confident data.

Hence, the first success metric will be the approval of the bot request.

Concerning data uploaded to the primary sources tool, numerical success can be measured by the number of curated statements and the engagement of new users.

These numbers are accessible through the status page.[27]

The soweego datasets should cover about 2.1 million items, i.e., people with no identifier to the target databases.[28]

At the time of writing this proposal, we cannot estimate the size of the non-confident dataset.

Still, we set the following success values, which should be considered flexible and will be adjusted as needed:

- 20,000 curated statements of the non-confident dataset, counted as the number of approved plus rejected statements;

- 25 new primary sources tool users.

With respect to qualitative metrics, we will create a WikiProject[29] to centralize the discussion over this effort.

3 shared metrics

The shared metrics[30] below naturally map to the project-defined ones:

- the number of newly registered users can be extracted from the primary sources tool user base;

- the number of content pages created or improved corresponds to Wikidata statements;

- the total participants will involve people with different backgrounds, mainly software contributors and participants to relevant events like WikiCite, where librarians meet together with researchers, knowledge engineers, and data visualization designers, just to name a few.

Project plan

[edit]Implementation

[edit]

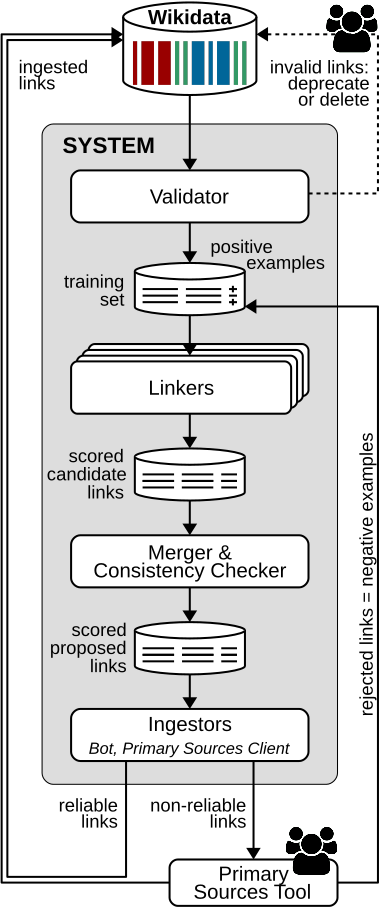

soweego workflowsoweego is a piece of machine learning[31] software for automatic and continuous population of Wikidata.

It takes as source people items (with extensibility to any item type) and as target a set of external database entries.

It generates links between the source and the target, dynamically learning from both existing links and the human feedback (link approval/rejection) resulting from manual curation via the primary sources tool.

As shown in Figure 4, soweego is a pipeline of the following components:

- the Validator retrieves existing links in Wikidata and performs sanity checks to validate them (e.g., it verifies that the target identifier is still valid and active). It caters for the live maintenance of links, thus helping the community identify invalid or superseded ones:

  - valid links act as positive examples for the training set used by a supervised[32] linking technique;

  - invalid links act as negative examples and are made available to the community for deletion or deprecation.

- four Linkers generate scored candidate links for people in the target databases, in the form of `<Wikidata item, property for authority control, external identifier>` statements with confidence score and timestamp qualifiers. The SocialLink codebase[33] will serve as a solid starting point for this component. A mechanism for the community to contribute additional linkers will be provided;

- the Merger & Consistency Checker analyzes, filters, and consolidates the scored candidate links, producing a merged set of links that are consistent among themselves and with respect to existing ones in Wikidata;

- two Ingestors, namely a Bot and a Primary Sources Client, are responsible for the Wikidata population, either directly for reliable links, or via manual curation with the Primary Sources Tool for non-reliable links.

Finally, ingested links can feed the dynamic training set with positive examples, whereas rejected links can be treated as negative examples. This community feedback loop allows the training set to grow constantly, thus eventually improving the linkers' performance.
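The flow from scored candidates to ingestion and feedback can be sketched as follows. This is a simplified illustration under stated assumptions, not the planned architecture: the `Link` and `TrainingSet` types, the `merge`, `route`, and `record_feedback` functions, the 0.9 threshold, and the sample values are all hypothetical, and the merge step here only keeps the best-scoring link per item and property, without the consistency checks against existing Wikidata links.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Link:
    """A scored candidate link (hypothetical structure)."""
    item: str        # Wikidata item
    prop: str        # property for authority control
    identifier: str  # external identifier
    score: float     # linker confidence in [0, 1]


@dataclass
class TrainingSet:
    positives: list[Link] = field(default_factory=list)
    negatives: list[Link] = field(default_factory=list)


def merge(candidates: list[Link]) -> list[Link]:
    """Keep the best-scoring link per (item, property) pair."""
    best: dict[tuple[str, str], Link] = {}
    for link in candidates:
        key = (link.item, link.prop)
        if key not in best or link.score > best[key].score:
            best[key] = link
    return list(best.values())


def route(links: list[Link], threshold: float) -> tuple[list[Link], list[Link]]:
    """Split links into (bot, primary sources tool) batches by confidence."""
    bot = [link for link in links if link.score >= threshold]
    curation = [link for link in links if link.score < threshold]
    return bot, curation


def record_feedback(training: TrainingSet, link: Link, approved: bool) -> None:
    """Feed a curation decision back into the training set."""
    (training.positives if approved else training.negatives).append(link)


# Placeholder items and identifiers, for illustration only.
candidates = [
    Link("Q_A", "P214", "id-1", 0.95),
    Link("Q_A", "P214", "id-2", 0.40),
    Link("Q_B", "P214", "id-3", 0.60),
]
bot_batch, curation_batch = route(merge(candidates), threshold=0.9)
training = TrainingSet()
record_feedback(training, curation_batch[0], approved=False)
```

In this toy run the high-confidence link would go to the bot, the remaining one to manual curation, and its rejection becomes a negative training example. Where the threshold should sit is left open here, since the proposal ties it to the estimated precision of each alignment.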

Work package

| ID | Title | Objective | Month | Effort |
|---|---|---|---|---|
| T1 | Target selection | Work with the community to identify the set of external databases (goal G4, deliverable D5) | M1-M3 | 10% |
| T2 | Validation | Define validation criteria and develop the validator component (goal G1, deliverable D1) | M1-M3 | 5% |
| T3 | Main Linker | Adapt the SocialLink codebase to build the linker for the target with the highest priority (goal G2, deliverable D2) | M2-M8 | 15% |
| T4 | Linker #2 | Extend the main linker to create one for the second target (goal G2, deliverable D2) | M4-M9 | 10% |
| T5 | Linker #3 | Extend the main linker to create one for the third target (goal G2, deliverable D2) | M7-M12 | 10% |
| T6 | Linker #4 | Extend the main linker to create one for the fourth target (goal G2, deliverable D2) | M7-M12 | 10% |
| T7 | Merging | Develop the merger component to output high-quality consistent links (goal G1, deliverable D3) | M2-M7 | 12.5% |
| T8 | Ingestion | Develop the Primary Sources Client and the Bot ingestors to populate Wikidata (goal G3, deliverable D4) | M2-M7 | 10% |
| T9 | Operation | Integrate and run the whole pipeline; supervise the ingestion of links (goal G4, deliverable D5) | M4-M12 | 5% |
| T10 | Packaging | Document and package all the components in a portable solution (virtual machine) (goal G5, deliverable D6) | M10-M12 | 7.5% |
| T11 | Community engagement | Promote the project and engage its key stakeholders | M1-M12 | 5% |

N.B.: overlap between certain tasks (in terms of timing) is needed to exploit mutual interactions among them.

Budget

The total amount requested is 75,238 €.

Budget Breakdown

| Item | Description | Commitment | Cost |
|---|---|---|---|
| Project leader | In charge of the whole work package | Full time (40 hrs/week), 12 PM(1) | 53,348 € |
| Software architect | Technical implementation head | Full time, 12 PM | 20,690 €(2) |
| Software developer | Assistant for the main linker and linker #2 (T3, T4) | Part time (20 hrs/week), 8 PM | Provided by the hosting research center |
| Software developer | Assistant for linkers #3 and #4 (T5, T6) | Part time, 6 PM | Provided by the hosting research center |
| Dissemination | Registration, travel, board & lodging for 2 relevant community conferences, e.g., WikiCite | One-off | 1,200 € |
| Total | | | 75,238 € |

(1) Person Months

(2) corresponds to 50% of the salary. The rest is covered by the hosting research center

Item costs are broken down as follows:

- the project leader's and the software architect's gross salaries are based on the standard salaries of the hosting research center (i.e., Fondazione Bruno Kessler),[34] namely "Ricercatore di prima fascia" (level 1 research scientist) and "Tecnologo/sperimentatore di secondo livello" (level 2 technical assistant) respectively. The salaries comply both with (a) the provincial collective agreement as per the provincial law n. 14,[35] and with (b) the national collective agreement as per the national law n. 240.[36] These laws respectively regulate research and innovation activities in the area where the research center is located (i.e., Trentino, Italy), and at a national level. More specifically, the former position is set to a gross labor rate of 25.65 € per hour, and the latter to 21.55 € per hour. The rates are in line with other national research institutions, such as the universities of Trieste,[37] Firenze,[38] and Roma;[39]

- the software developers are fully provided by the hosting research center;

- the dissemination will either cover 2 nearby events or 1 far event.

N.B.: Fondazione Bruno Kessler will physically host the human resources, but it will not be directly involved in this proposal with respect to the requested budget: the project leader will serve as the main grantee and will appropriately allocate the funding.

Community engagement

Relevant communities stem from both Wikimedia and external efforts. They are grouped depending on their scope.

Strategic partners

We share the main goal with the following ongoing or past efforts. As such, collaboration is mutually beneficial.

- Mysociety, via the EveryPolitician project;[40]

- Sum of all paintings project;

- Wikidata Developers team.

Identifier database owners

soweego would ensure live maintenance of external identifiers.

Therefore, the following relevant subjects can be engaged accordingly.

The list contains the most used catalogs and others that were mentioned in our first inquiry.

It is not exhaustive and may be subject to updates depending on T1 outcomes.

- OCLC organization, responsible for VIAF;

- GND contacts;

- LoC contacts;

- MusicBrainz team;

- ORCID staff;[41]

- German National Library for Economics contacts.[42]

Linking Wikidata to identifiers

Efforts that are actively working on the addition of identifier references to Wikidata:

Importing identifiers to Wikidata

Efforts that aim at expanding Wikidata with new items based on external identifiers:

- WikiProject companies;

- WikiProject Universities;

- ContentMine, via the WikiFactMine project.[43]

References

- ↑ a b m:Grants:Project/ContentMine/WikiFactMine

- ↑ a b m:Grants:IEG/StrepHit:_Wikidata_Statements_Validation_via_References

- ↑ m:WikiCite

- ↑ https://docs.google.com/presentation/d/1XX-yzT98fglAfFkHoixOI1XC1uwrS6f0u1xjdZT9TYI/edit?usp=sharing, slides 15 to 19

- ↑ https://musicbrainz.org/

- ↑ soweego can still enable imports, but only if the community deems it appropriate for each target database, depending on its terms of use and assessed reliability

- ↑ a b c d:Wikidata:Primary_sources_tool

- ↑ http://sociallink.futuro.media/

- ↑ Nechaev, Y., Corcoglioniti, F., Giuliano, C.: Linking knowledge bases to social media profiles. In: Proceedings of 32nd ACM Symposium on Applied Computing (SAC 2017).

- ↑ Nechaev, Y., Corcoglioniti, F., Giuliano, C.: SocialLink: Linking DBpedia Entities to Corresponding Twitter Accounts. In: Proceedings of 16th International Semantic Web Conference (ISWC 2017). To appear.

- ↑ https://lists.wikimedia.org/pipermail/wikidata/2017-September/011109.html

- ↑ https://lists.wikimedia.org/pipermail/wikidata/2017-September/thread.html#11109

- ↑ a b Grants_talk:Project/Hjfocs/soweego

- ↑ SPARQL query 1

- ↑ d:Special:Statistics

- ↑ https://tools.wmflabs.org/sqid/#/browse?type=properties (select datatype set to ExternalId, Used for class set to human Q5)

- ↑ http://viaf.org/

- ↑ SPARQL query 2

- ↑ http://id.loc.gov/

- ↑ http://www.dnb.de/EN/gnd

- ↑ Note that IMDb locates before GND. However, we do not consider it, since it is a proprietary commercial database

- ↑ SPARQL query 3

- ↑ SPARQL query 4

- ↑ `for i in {0..3580}; do curl -G -H 'Accept:text/tab-separated-values' --data-urlencode "query=SELECT * WHERE {?person wdt:P31 wd:Q5 . OPTIONAL {?person wdt:P570 ?death .}} OFFSET ""$((i*1000))"" LIMIT 1000" https://query.wikidata.org/sparql | cut -f2 | grep -c '^[^"]' >> living ; done`

- ↑ As per m:Grants:Metrics#Instructions_for_Project_Grants

- ↑ d:Wikidata:Requests_for_permissions/Bot

- ↑ https://tools.wmflabs.org/wikidata-primary-sources/status.html

- ↑ #Where:_use_case

- ↑ d:Wikidata:WikiProjects

- ↑ m:Grants:Metrics#Three_shared_metrics

- ↑ en:Machine_learning

- ↑ en:Supervised_learning

- ↑ https://github.com/Remper/sociallink

- ↑ http://hr.fbk.eu/sites/hr.fbk.eu/files/ccpl_28set07_aggiornato_2009.pdf - page 82, Tabella B

- ↑ http://www.consiglio.provincia.tn.it/doc/clex_26185.pdf

- ↑ http://www.camera.it/parlam/leggi/10240l.htm

- ↑ https://www.units.it/intra/personale/tabelle_stipendiali/?file=tab.php&ruolo=RU - 32nd item of the inquadramento dropdown menu

- ↑ http://www.unifi.it/upload/sub/money/2016/td_tabelle_costi_tipologia_B.xlsx

- ↑ https://web.uniroma2.it/modules.php?name=Content&action=showattach&attach_id=15798

- ↑ Grants:Project/EveryPolitician

- ↑ https://lists.wikimedia.org/pipermail/wikidata/2017-September/011117.html

- ↑ https://lists.wikimedia.org/pipermail/wikidata/2017-September/011134.html

- ↑ Grants:Project/ContentMine/WikiFactMine

Get involved

Participants

- Marco Fossati

- Marco (AKA Hjfocs) is a research scientist with a double background in natural languages and information technologies. He holds a PhD in computer science from the University of Trento, Italy and works at the Future Media unit at Fondazione Bruno Kessler (FBK).

- He is currently focusing on Wikidata data quality and leads the Wikidata primary sources tool development, as well as the StrepHit project, funded by the Wikimedia Foundation.

- His profile is highly interdisciplinary and can be defined as a keen supporter of applied research in natural language processing, backed by a strong dissemination attitude and an innate passion for open knowledge, all blended with software engineering skills and a deep affection for human languages.

- Claudio Giuliano

- Claudio (AKA Cgiulianofbk) is a researcher with more than 18 years of experience in natural language processing and machine learning. He is currently head of the Future Media unit at FBK, focusing on applied research to meet industry needs. He founded and led Machine Linking, a spin-off company incubated at the Human Language Technologies research unit: the main outcome is The Wiki Machine, an open-source framework that performs word sense disambiguation in more than 30 languages by finding links to Wikipedia articles in raw text. Among The Wiki Machine applications, Pokedem is an intelligent agent that analyses tweets to assist human social media managers.

- Yaroslav Nechaev

- Yaroslav (AKA Remper) is a PhD candidate at FBK / University of Trento. He is the creator and maintainer of the SocialLink resource, which will be the starting point for this proposal.

- His main research focus is on natural language processing and deep learning applied to recommender systems-related challenges.

- Francesco Corcoglioniti

- Francesco (AKA Fracorco) is a post-doc researcher in the Future Media unit at FBK, where he combines semantic Web, machine learning, and natural language processing techniques for the analysis, processing, and extraction of knowledge from textual contents. He obtained his PhD in computer science at the University of Trento, Italy, in 2016.

- Advisor Using my experience in matching external data sources with Wikidata to give both strategic and technical advice. Magnus Manske (talk) 09:19, 2 October 2017 (UTC)

- Volunteer I'm a software engineer, and if there's technical assistance I can provide, give me a shout. I've been involved in reporting stuff to en:wikipedia:VIAF/errors in the past, and I'm interested in larger scale management of this data. TheDragonFire (talk) 10:32, 15 October 2017 (UTC)

- Volunteer Helping with ethical supervision of the project. CristianCantoro (talk) 12:18, 9 August 2018 (UTC)

- Volunteer Sociologist of organization input on sociology-issues, involving ethics in academia Piotrus (talk) 09:46, 28 August 2018 (UTC)

- Volunteer Developer Lc fd (talk) 18:01, 9 October 2018 (UTC)

Community notification

The following links include notifications and related discussions, sorted in descending order of relevance.

- Wikidata

- first investigation on which identifiers to choose: https://lists.wikimedia.org/pipermail/wikidata/2017-September/thread.html#11109

- need for Open Library identifiers: d:Wikidata_talk:Primary_sources_tool/Archive/2017#Open_Library_identifiers

- request of other identifiers addition: d:Wikidata_talk:Primary_sources_tool/Archive/2017#ID_gathering

- call for support: https://lists.wikimedia.org/pipermail/wikidata/2017-September/011186.html

- project chat: d:Wikidata:Project_chat#Soweego:_wide-coverage_linking_of_external_identifiers..09Call_for_support

- WikiCite: https://groups.google.com/a/wikimedia.org/d/msg/wikicite-discuss/MzYxg8S6oIM/QIZT5ov0BwAJ

- Wiki Research: https://lists.wikimedia.org/pipermail/wiki-research-l/2017-September/006046.html

- Wikimedia: https://lists.wikimedia.org/pipermail/wikimedia-l/2017-September/088672.html

- Open Glam: https://lists.okfn.org/pipermail/open-glam/2017-September/001714.html

Endorsements

Do you think this project should be selected for a Project Grant? Please add your name and rationale for endorsing this project below! (Other constructive feedback is welcome on the discussion page).

Support - This will bring great value, both for Wikidatians and external reusers of data. Ainali (talk) 08:22, 25 September 2017 (UTC)

Support - Good use case for the Primary Sources Tool. Endorsement. Tomayac (talk) 12:42, 9 October 2017 (UTC)

Strong oppose - This would increasingly turn WikiData into a creepy surveillance tool like Maltego. That alone should be grounds for rejection. Moreover, VIAF & similar identifiers relate directly to professional output, are clearly aligned with the WMF's mission, and are professionally or peer-curated, so the justification for using them in WikiData is clear. Twitter, Facebook, and other commercial social networks do not and are not, so the justification for using them in WikiData is exceedingly weak. Zazpot (talk) 09:25, 26 September 2017 (UTC)

- I will consider changing my opposition to support if the following changes to the proposal are made and kept:

- Explicitly state that the project, even if extended, will only ever ingest material from, or create links from Wikimedia-run projects directly to, datasets that are peer-reviewed (e.g. MusicBrainz) or published by accredited academic bodies (e.g. VIAF), and that describe professional output.

- Explicitly state that the project, even if extended, will never ingest material from, or create links from Wikimedia-run projects directly to, social networks, commercial or otherwise.

- Remove all other mentions of social networks, both by name (e.g. "Twitter") and by phrase ("social network(s)" or "social media").

- Create a new section in your proposal explicitly stating that de-anonymisation (aka "doxxing") can cause great harm to its victims, is often unlawful, and that project participants will cease and revert any part of the project that is found to facilitate it, even if this occurs during a future extension of the project. Note in that section that the project's participants are aware of and respect people's legal and moral right to privacy.

- If those changes are made, please WP:PING me, and I will review them and reconsider my opposition. Zazpot (talk) 11:15, 26 September 2017 (UTC)

- I don't think that the proposal is talking about importing data from VIAF, MusicBrainz, Twitter or Facebook but merely to add links or identifiers. With respect to the topic of privacy, there is a discussion on the talk page. --CristianCantoro (talk) 16:28, 26 September 2017 (UTC)

- CristianCantoro, exactly: the proposal is to import (or, if you prefer, add) identifiers (presumably in the form of URIs or IRIs) to Wikidata. And the proposal as it stood before I raised my objection above did indeed propose importing such identifiers from Twitter and Facebook. Such claims could be sensitive. For instance, as originally proposed, soweego might conceivably take a Wikidata entry for a person who is not notable (e.g. a junior scientist who has perhaps published one or two papers but has no desire to be in the public eye), identify their personal (i.e. not professional or work-related) Facebook profile via SocialLink with high confidence, and automatically add a claim to Wikidata that that Facebook profile belongs to that scientist. This automated, public linking together of a person's professional and private lives, without their informed consent, would be highly unethical. The fact that it would (according to the proposal) facilitate deeper mining of that person's data, such as date of birth, profile picture, etc (again, without that person's informed consent) is an example of precisely the Maltego-like doxxing or privacy violation issues I am concerned about. Zazpot (talk) 20:19, 26 September 2017 (UTC)

- Linking to Twitter and Facebook was removed from the proposal before the submission deadline as we believe further discussion with the community is needed, see explanation in the proposal talk page where we also address the four requests above --Fracorco (talk) 15:34, 27 September 2017 (UTC)

- Fracorco, I'm glad to see you explicitly discussing de-anonymization risks, which are indeed important to avoid. However, I am not certain you recognize a slightly different concern. This concern, which I illustrated above, relates to the act of claiming connections between people's onymous personal and professional lives, or between any previously unconnected aspects of a person's private life. No such claim should earnestly be made in public unless either the subject's informed consent has been provably obtained, or the claimant can demonstrate an overwhelming public interest for making the claim in each case. Making such claims in public is not the same thing as de-anonymization, but could still be a form of doxxing.

- It is important to understand that many people who have online profiles are not aware of the ways in which these profiles risk their personal data being aggregated or mined. In some cases, people might not even have published that information intentionally (e.g. they might have thought that they were creating a private profile, or their children might have created the profile for them without their knowledge, or whatever). This is one of the reasons why it is unethical to assume informed consent even if the information is publicly accessible.

- Also, there is a risk that Soweego (or the humans vetting its suggestions via the PST) will make mistakes, and claim untrue things about people. Besides the chance that such a claim might conceivably constitute defamation, there is a real possibility that it will flow into other databases created as derivative works of Wikidata. Because Wikidata is CC0-licensed, such claims in derivative databases would not necessarily have their sources declared, and could end up in all sorts of places (search engine results? unscrupulous employer screening databases?) that could cause the subject much irritation for years. Correcting a false claim in every derivative database might prove impossible for the victims. (For comparison, when credit agencies like Equifax accidentally add false claims to a person's record, it can take substantial effort for the victim to confirm and, finally, to correct these claims. And that is just one database.)

- I am aware, and am relieved, that the proposal was edited such that the third item on my list above has been satisfied. If the remaining three items also come to be satisfied, I will gladly reconsider my strong opposition. Zazpot (talk) 20:21, 27 September 2017 (UTC)

- @Zazpot, please see my answer to you in the discussion page. --CristianCantoro (talk) 11:04, 29 September 2017 (UTC)

- I don't think that the proposal is talking about importing data from VIAF, MusicBrainz, Twitter or Facebook but merely to add links or identifiers. With respect to the topic of privacy, there is a discussion on the talk page. --CristianCantoro (talk) 16:28, 26 September 2017 (UTC)

- From what I have seen, Wikidata is not filled with Facebook and Twitter accounts. D1gggg (talk) 00:20, 25 October 2017 (UTC)

Support Wikidata and identifiers are a match made in heaven: they are harmless when not useful and very helpful when they indeed are. Aubrey (talk) 14:18, 28 September 2017 (UTC)

Support Very interesting and useful project --Susanna Giaccai 14:21, 28 September 2017 (UTC)

Neutral I am a bit torn on this subject. I am a big fan of adding identifiers to Wikidata, and I do it all the time by transferring identifiers from Commons or using excellent existing tools (AuthorityControl JavaScript) to search VIAF. Any bot that does it automatically would be great, but I am not convinced that it can be done automatically. For VIAF, that is all they do: why build a new tool when, often, once you find the VIAF page, it already has a link to Wikidata? I would propose not to invest in very complicated matching algorithms but to focus on easy matches (match names and dates of birth and death), run the bot to constantly link Wikidata to VIAF, RKD (Rijksbureau voor Kunsthistorische Documentatie), Benezit and other identifiers to content-rich databases, and then parse data from those databases and upload it to Wikidata with proper references, or add references if the data is already there. We should also improve existing tools for manual Wikidata editing: it should be easier to search VIAF, RKD, Benezit, MusicBrainz and all the other databases for a match and then ask a human to approve or reject it. It would also be great if we could add references to existing statements by somehow saying "I got it from this or that identifier". Finally, I am sorry to say that I did not find d:Wikidata:Primary sources tool usable at this stage: the tool, as far as I can tell, does not work, and I tried it for a while. As a result, I do not have high confidence that extensions to that tool will work either. I would not support extending the tool until the current version actually works. You can measure success by tracking how many edits were done with the help of the tool. Jarekt (talk) 02:32, 29 September 2017 (UTC)

Support per Aubrey above. --CristianCantoro (talk) 10:56, 29 September 2017 (UTC)

Support I'd like to support this project from the Wikidata dev team's side. I think this will be useful for Wikidata for two reasons:

- Having more identifiers in Wikidata makes Wikidata significantly more useful. It allows finding items in Wikidata by their identifiers for more catalogs. It also interlinks us with more sources on the linked open data web. This makes it easy to find additional data about a topic without the need to have all of this data in Wikidata itself.

- We are exploring checking Wikidata's data against other databases in order to find mismatches that point to issues in the data. Having more connections via identifiers is a precondition for that.

--Lydia Pintscher (WMDE) (talk) 11:19, 29 September 2017 (UTC)

Support As user of Wikidata, involved in Identifiers (WLM ID), I strongly support projects like this. --CristianNX (talk)

Support This proposal aims to make our haphazard efforts in this area more methodical, and off-load as much work as possible to machines, without compromising quality. I have tools to handle small and medium-size third-party datasets, but the "big ones" need alternative approaches. --Magnus Manske (talk)

Weak support I echo some of the concerns expressed above concerning privacy and the ability to do many of these things automatically. On the privacy end, perhaps the automated work should focus on those who are verifiably deceased, and try to avoid living people. From my experience in manually matching thousands of records between Wikidata and external databases, there are no good heuristics that work consistently. Name closeness is highly dependent on what the names are and on the language/culture (some portions of names are very common, some short strings in names are almost unique, some names have alternate spellings that mean the same, but sometimes a single different letter means something completely different). Pretty much the same issues arise with geographic cues. Even when items are linked by identifiers, sometimes the match doesn't make sense: people often link their identifiers to Wikipedia pages that may be about related but not quite exactly the same things... All that said, a lot can be done with automatic tools when you really are sure things are working correctly, and the general approach here seems sensible. So I do support funding this project, but I feel we may need to be careful and not over-promise about what it will actually accomplish. ArthurPSmith (talk) 14:12, 30 September 2017 (UTC)

Support --Hadi

Support Gamaliel (talk) 03:16, 2 October 2017 (UTC)

Oppose

Authority coreferencing is extremely important to me (see Wikidata:WikiProject_Authority_control: notified that project so people can comment here). Regretfully, I think this proposal has many flaws, see detailed discussion at Grants_talk:Project/Hjfocs/soweego#Proposal_shortcomings --Vladimir Alexiev (talk) 09:34, 2 October 2017 (UTC)

Support --Chiara The project allows including references (perhaps not verified) that are not included in other national or international authority tools but are very useful for identifying people. The only thing that matters is to declare that these references are not verified!

- as outlined in my comments on the talk page I think this proposal is a bit premature. But I would likely support the proposal if submitted for the next round (at this point the Primary Sources Tool should be fully functional). − Pintoch (talk) 09:19, 4 October 2017 (UTC)

- Tools for creating mappings between Wikidata and authorities/knowledge organization systems are very relevant for my work. However, I think various issues raised here and on the talk page (particularly how privacy concerns can be addressed in the proposed workflows, and the shortcomings of the proposal in its current state as noted by Vladimir Alexiev) should be considered and integrated into a reworked version of the proposal. Jneubert (talk) 11:03, 6 October 2017 (UTC)

Support As per Lydia Pintscher above. I also want to vouch, for what it's worth, for the grantee: he already did good work with his StrepHit project, and I'm certain he'll continue to provide us with useful tools to get a better Wikidata going. Sannita - not just another it.wiki sysop 13:30, 7 October 2017 (UTC)

Support The output of this project would be very useful indeed for a plethora of initiatives. Essepuntato (talk) 16:45, 8 October 2017 (UTC)

Support It's a very important initiative that definitely needs to move forward in order to improve Wikidata as a central knowledge hub. But I share the concern raised by Pintoch: we need to have Primary Sources working well to make this proposal fully functional. Tpt (talk) 15:42, 10 October 2017 (UTC)

Support Clearly the data items need to have these links to other authorities in order to reduce ambiguity, and to allow for the validation of other claims. As it is, I can see that many of the claims I am making by hand could easily be done and verified by automated means. Given the large scale of Wikidata, the more work done automatically the better, leaving only the problematic claims to be done by humans. I think Hjfocs is very well suited to this type of work. Danrok (talk) 18:19, 10 October 2017 (UTC)

Support The overall strategy appears sound to improve the rate of identifier linking and the vetting of match quality. I strongly support this project. Linked identifiers create a network effect of value growth for Wikidata, and since human vetting still takes place on matches of questionable quality, this appears to create a valuable foundation for the growth of linked data and semantic work. Wolfgang8741 (talk) 23:41, 10 October 2017 (UTC)

Support Identifier linking tools are always very useful! I hope it will be accessible to people not familiar with Wikidata. Nattes à chat

Support Useful. ~ Moheen (keep talking) 18:07, 11 October 2017 (UTC)

Support - This will be good, nice tools will be good. --Frettie (talk) 06:46, 12 October 2017 (UTC)

Support Csyogi (talk) 14:42, 14 October 2017 (UTC)

- Something like this needed for maintenance of the data. Nizil Shah (talk) 05:59, 12 October 2017 (UTC)

- This can save a lot of manual work, that is also possible to do with a bot-account. I support this project! QZanden (talk) 22:43, 14 October 2017 (UTC)

Support Positive. Yeza (talk) 04:36, 15 October 2017 (UTC)

Support It seems to me that it can be a really useful project. Uomovariabile (talk to me) 06:58, 16 October 2017 (UTC)

Support very good tool to improve data quality with reliable references Projekt ANA (talk) 19:33, 16 October 2017 (UTC)

- per Magnus Manske. Quiddity (talk) 20:10, 16 October 2017 (UTC)

- Because of more linking of items. Crazy1880 (talk) 15:28, 21 October 2017 (UTC)

Support Per Lydia: "Having more identifiers in Wikidata makes Wikidata significantly more useful". Yiyi (talk) 22:30, 21 October 2017 (UTC)

- a useful tool no doubt Oluwa2Chainz (talk) 16:52, 23 October 2017 (UTC)

Support I think it is very useful to develop further interlinking algorithms for Wikidata, and the fact the Magnus Manske pointed out that new solutions are needed for big data sets suggests that this project can fill in a gap.

- I also think that the proposed consistency checking step is interesting.

- I would like to see a solution that not only focuses on aligning people, but rather any type of Wikidata item, but I can understand that it is easier to focus on one type first.

- I think it would also be convenient to integrate existing solutions tighter -- if these algorithms require human validation, it might make sense to request such human input via Mix'n'Match, as much as possible. My point is that, it might be good to centralise some use cases in already successful tools. Criscod (talk) 08:24, 24 October 2017 (UTC)

Support Hopefully we would get ways to get reasonable coverage quickly. Control over "frequency of mistakes" would be useful, but probably difficult to implement. Other projects have more specific goals, different from this project. D1gggg (talk) 00:16, 25 October 2017 (UTC)

Support Seems like a useful project that could increase the amount of referenced entities. Mikeshowalter (talk) 22:19, 25 October 2017 (UTC)

Wait, need to resolve privacy issues as mentioned by Zazpot. If it is only used to check and merge Wikidata doubles, that's OK. Need to resolve issues from here first: Grants_talk:Project/Hjfocs/soweego#Privacy issues with personal information Popolon (talk) 12:47, 26 October 2017 (UTC)

- Great project, it will be a great tool to Wikidata. Escudero (talk) 18:15, 26 October 2017 (UTC)

Support We need such good tools for Wikidata. Conny (talk) 20:34, 26 October 2017 (UTC)

Support Tools like this are really vital to make Wikidata a good resource, and existing tools actually do what they set out to do well. I actually think it's pretty worthwhile to link social media sites for certain people--public figures, entertainers--but I can see how this tool isn't the best way to do this and it's good this is going to be very focused on identifiers, since adding them is a necessary step to improve Wikidata and needs a new approach. --innotata 23:59, 26 October 2017 (UTC)

- I support this proposal. However, the time and budget plan seem very ambitious. Thus I would recommend applying for external funding also. 89.204.135.140 06:59, 27 October 2017 (UTC)

Support I think efforts to link Wikidata to existing semantic datasets are very valuable. --A3nm (talk) 09:05, 31 October 2017 (UTC)

Support Seems like a good idea. --Piotrus (talk) 06:46, 19 August 2018 (UTC)

Support This would be a critical addition to the mission and work of Wikidata! Great work so far! Todrobbins (talk) 16:47, 4 March 2020 (UTC)