As a mismatch provider, I want to provide accurate mismatches for date values via the CSV import.

Problem:

Mismatch Finder currently ignores the calendar model and precision of dates when importing mismatches via CSV, even though both are essential for determining the exact meaning of a date.

Current state:

- We accept ISO-style dates in the wikidata_value column of the CSV, without a calendar model or precision

Desired state in the future:

- To support different precisions for dates, we would like to infer a date's precision from the format it is given in: e.g. 1950s should yield a date with a precision of decade (8)

- To support different calendar models, we would like to infer the most likely calendar model in the same way Wikibase does. When the mismatch provider wants to override this, we want to give them the option to do so by providing the calendar model as an additional field in the CSV import.

Example:

Different Precisions:

- 2022-07-14 -> precision day

- 1950-05 -> precision month

- 1950 -> precision year

- 1950s -> precision decade

- 19. century -> precision century

- 2. millennium -> precision millennium

- 2022-00-00 -> precision year

- 1950-05-00 -> precision month

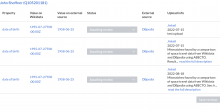
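The precision rules in the examples above can be sketched in Python as follows. This is a minimal, hypothetical sketch: the function name infer_precision and the regular expressions are illustrative assumptions, and the numeric codes assume Wikibase's precision numbering (6 millennium, 7 century, 8 decade, 9 year, 10 month, 11 day). It accepts only the literal formats listed (e.g. "19. century", not "19th century") and does not handle negative years.

```python
import re

# Wikibase time precision codes (decade = 8, as stated in the desired state).
PRECISION_MILLENNIUM = 6
PRECISION_CENTURY = 7
PRECISION_DECADE = 8
PRECISION_YEAR = 9
PRECISION_MONTH = 10
PRECISION_DAY = 11


def infer_precision(value: str) -> int:
    """Hypothetical helper: map the textual format of a date to a precision code."""
    value = value.strip()
    if re.fullmatch(r"\d+\. millennium", value):  # e.g. "2. millennium"
        return PRECISION_MILLENNIUM
    if re.fullmatch(r"\d+\. century", value):  # e.g. "19. century"
        return PRECISION_CENTURY
    if re.fullmatch(r"\d+0s", value):  # e.g. "1950s"
        return PRECISION_DECADE
    # ISO-style: YYYY, YYYY-MM or YYYY-MM-DD
    match = re.fullmatch(r"(\d{1,4})(?:-(\d{2}))?(?:-(\d{2}))?", value)
    if match is None:
        raise ValueError(f"unsupported date format: {value!r}")
    _year, month, day = match.groups()
    # A missing component or "00" marks that component as unknown.
    if month is None or month == "00":
        return PRECISION_YEAR
    if day is None or day == "00":
        return PRECISION_MONTH
    return PRECISION_DAY


if __name__ == "__main__":
    for example in ["2022-07-14", "1950-05", "1950", "1950s",
                    "19. century", "2. millennium", "2022-00-00", "1950-05-00"]:
        print(example, infer_precision(example))
```

Raising an error for unrecognised formats, rather than falling back to a default precision, lets the import reject ambiguous rows instead of silently storing a wrong precision.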

Explicit Calendar Model (specified in the meta_wikidata_value column):

statement_guid,property_id,wikidata_value,meta_wikidata_value,external_value,external_url
Q184746$7814880A-A6EF-40EC-885E-F46DD58C8DC5,P569,1046-04-03,Q12138,3 April 1934,http://fake.source.url/12345

Implicit Calendar Model (meta_wikidata_value left empty, so the calendar model is inferred):

statement_guid,property_id,wikidata_value,meta_wikidata_value,external_value,external_url
Q184746$7814880A-A6EF-40EC-885E-F46DD58C8DC5,P569,1934-04-03,,3 April 1934,http://fake.source.url/12345
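The following Python sketch shows how an explicit calendar model in meta_wikidata_value could take priority over an inferred one, using the two example rows above. It rests on several assumptions: the names infer_calendar_model and resolve_calendar_model are hypothetical; the inference is a simple cutoff (years up to 1582, when the Gregorian calendar was introduced, are treated as Julian, later years as Gregorian) standing in for Wikibase's actual logic, which should be checked against the Wikibase source; and only ISO-style values are handled. Q1985727 and Q1985786 are the calendar model items Wikibase uses for Gregorian and Julian time values.

```python
import csv
import io
import re

# Calendar model items used by Wikibase time values.
GREGORIAN = "Q1985727"  # proleptic Gregorian calendar
JULIAN = "Q1985786"  # proleptic Julian calendar

# Assumed cutoff approximating Wikibase's behaviour: years up to 1582 are Julian.
LAST_JULIAN_YEAR = 1582


def infer_calendar_model(wikidata_value: str) -> str:
    """Hypothetical inference for ISO-style values such as 1934-04-03."""
    match = re.match(r"(\d{1,4})(?:-|$)", wikidata_value.strip())
    if match is None:
        raise ValueError(f"not an ISO-style date: {wikidata_value!r}")
    year = int(match.group(1))
    return JULIAN if year <= LAST_JULIAN_YEAR else GREGORIAN


def resolve_calendar_model(row: dict) -> str:
    """Prefer an explicit calendar model from meta_wikidata_value; otherwise infer one."""
    explicit = (row.get("meta_wikidata_value") or "").strip()
    if explicit:
        return explicit
    return infer_calendar_model(row["wikidata_value"])


SAMPLE_CSV = """\
statement_guid,property_id,wikidata_value,meta_wikidata_value,external_value,external_url
Q184746$7814880A-A6EF-40EC-885E-F46DD58C8DC5,P569,1046-04-03,Q12138,3 April 1934,http://fake.source.url/12345
Q184746$7814880A-A6EF-40EC-885E-F46DD58C8DC5,P569,1934-04-03,,3 April 1934,http://fake.source.url/12345
"""

if __name__ == "__main__":
    for row in csv.DictReader(io.StringIO(SAMPLE_CSV)):
        # Prints "1046-04-03 Q12138", then "1934-04-03 Q1985727".
        print(row["wikidata_value"], resolve_calendar_model(row))
```

Treating any non-empty meta_wikidata_value as authoritative keeps the override opt-in: rows like the implicit example, which leave the column empty, fall back to inference.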

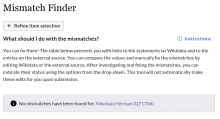

Acceptance criteria:

- the documentation and examples for mismatch providers are updated

- various date formats are accepted as listed above and the precision is inferred automatically

- various dates are accepted and the calendar model is inferred in the same way Wikibase does, unless the calendar model is explicitly specified in the mismatch

Notes:

The above only applies to the Wikidata side of the mismatch ("wikidata_value" in the uploaded CSV). The external source side ("external_value") may contain any value, including the name of the calendar model.